Apple recently launched MLX, or ML Explore, the company's machine learning (ML) framework for Apple Silicon computers. The framework is specifically designed to simplify the process of training and running ML models on computers powered by Apple's M1, M2 and M3 series chips. The company says MLX has a unified memory model. Apple has also demonstrated the use of the framework, which is open source, allowing machine learning enthusiasts to run it on their laptop or computer.

According to details shared by Apple on code hosting platform GitHub, the MLX framework has a C++ API along with a Python API that is closely based on NumPy, the Python library for scientific computing. Users can also take advantage of higher-level packages that enable them to build and run more complex models on their computer, according to Apple.

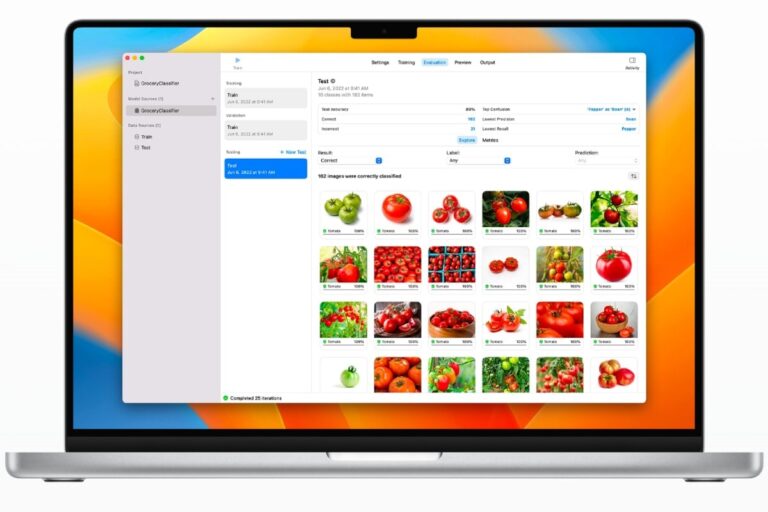

MLX simplifies the process of training and running ML models on a computer. Developers were previously forced to rely on a translator to convert and optimize their models (using CoreML). This has now been replaced by MLX, which allows users running Apple Silicon computers to train and run their models directly on their own devices.


Apple shared this image of a big red sign with the text MLX, generated by Stable Diffusion in MLX

Photo credit: GitHub/Apple

Apple says MLX's design follows other popular frameworks in use today, including ArrayFire, JAX, NumPy and PyTorch. The company has touted its framework's unified memory model: MLX arrays live in shared memory, while operations on them can be performed on any of the supported device types (currently the CPU and the GPU) without the need to create copies of data.

The company has also shared examples of MLX in action, performing tasks such as image generation using Stable Diffusion on Apple Silicon hardware. When generating a batch of images, Apple says MLX is faster than PyTorch for batch sizes of 6, 8, 12 and 16, with up to 40 percent higher throughput than the latter.

The tests were conducted on a Mac powered by an M2 Ultra chip, the company's fastest processor to date. MLX is capable of generating 16 images in 90 seconds, while PyTorch would take about 120 seconds to perform the same task, according to the company.
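As a rough check on those figures, the reported timings can be converted into throughput. The snippet below is a minimal sketch in plain Python: the function `throughput` and the constant names are illustrative and are not part of MLX or Apple's benchmark code, and the timings are the approximate values quoted in this article.

```python
def throughput(images: int, seconds: float) -> float:
    """Return the number of images generated per second."""
    return images / seconds


# Approximate figures quoted in the article for a batch of 16 images
# on an M2 Ultra (assumed to be wall-clock times for the whole batch).
BATCH_SIZE = 16
MLX_SECONDS = 90.0
PYTORCH_SECONDS = 120.0

mlx_rate = throughput(BATCH_SIZE, MLX_SECONDS)
pytorch_rate = throughput(BATCH_SIZE, PYTORCH_SECONDS)

# Relative throughput gain of MLX over PyTorch, as a fraction.
throughput_gain = mlx_rate / pytorch_rate - 1.0

# Fraction of wall-clock time saved for the same batch.
time_saved = 1.0 - MLX_SECONDS / PYTORCH_SECONDS

print(f"MLX:     {mlx_rate:.3f} images/s")
print(f"PyTorch: {pytorch_rate:.3f} images/s")
print(f"Throughput gain: {throughput_gain:.1%}")
print(f"Time saved:      {time_saved:.1%}")
```

With these rounded timings, the batch of 16 works out to roughly a third more throughput and a quarter less time. The "up to 40 percent" figure is Apple's own claim across the batch sizes it tested.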

The video is a Llama v1 7B model implemented in MLX and running on an M2 Ultra.

More here: https://t.co/gXIjEZiJws

* Train a Transformer LM or fine-tune with LoRA

* Text generation with Mistral

* Image generation with Stable Diffusion

* Speech recognition with Whisper

pic.twitter.com/twMF6NIMdV

Awni Hannun (@awnihannun), 5 December 2023

Other examples of MLX in action include generating text using Meta's open source LLaMA language model as well as the Mistral large language model. AI and ML researchers can also use OpenAI's open source Whisper tool to run speech recognition models on their computer using MLX.

The release of Apple's MLX framework could help make ML research and development easier on the company's hardware, eventually allowing developers to bring better tools that can be used for apps and services that offer on-device ML features that run efficiently on a user's computer.